Accountants’ tricks can help identify cheating scientists, says new St Andrews research

Auditing practices from the finance industry can be adapted to identify academic fraud, according to new research by the University of St Andrews.

In a paper published in the journal Research Integrity and Peer Review (Tuesday 11 April), the authors show how statistical tools can be used effectively by those who review scientific studies to help detect and investigate suspect data.

When scientists publish their latest discoveries in journals, some papers are later retracted. This can happen because corrections are needed, or because of concerns that the research may not have been carried out properly, or even that data have been manipulated or fabricated.

Retractions of scientific papers neared 5,000 globally in 2022, according to Retraction Watch, amounting to almost 0.1% of published articles. Although uncommon, cases of scientific fraud have a disproportionate impact on public trust in science.

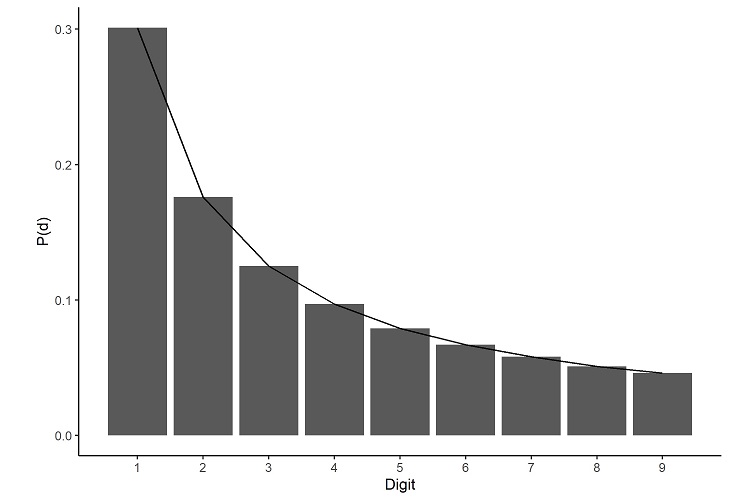

Taking inspiration from well-established financial auditing practices, the researchers recommend that scientific institutions and publishers improve their fraud controls to weed out fraudsters more effectively. The paper examines Benford’s Law, which describes the relative frequency distribution of the leading digits of numbers in many datasets and is already used in professional auditing.
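To make the idea concrete, here is a minimal Python sketch of a Benford check. Benford’s Law gives the expected proportion of numbers whose leading digit is d as P(d) = log10(1 + 1/d), so about 30.1% of values should start with 1 and only about 4.6% with 9. The comparison below assumes a chi-square goodness-of-fit test, which is one common choice; the release does not say which tests the paper recommends, and the function names `benford_expected`, `leading_digit` and `benford_chi_square` are hypothetical.

```python
import math
from collections import Counter


def benford_expected():
    """Expected leading-digit proportions under Benford's Law: P(d) = log10(1 + 1/d)."""
    return {d: math.log10(1 + 1 / d) for d in range(1, 10)}


def leading_digit(x):
    """Return the first significant (non-zero) digit of a non-zero int or float."""
    if x == 0:
        raise ValueError("zero has no leading digit")
    if isinstance(x, int):
        # Exact for integers of any size.
        return int(str(abs(x))[0])
    # repr() gives the shortest string that round-trips, e.g. 0.0456 -> '0.0456'
    # or 9.5e-07 -> '9.5e-07'; stripping leading '0' and '.' characters
    # leaves the first significant digit at the front.
    return int(repr(abs(x)).lstrip("0.")[0])


def benford_chi_square(values):
    """Chi-square statistic comparing observed leading digits with Benford's Law.

    Zeros are skipped because they have no leading digit.
    """
    digits = [leading_digit(v) for v in values if v != 0]
    n = len(digits)
    if n == 0:
        raise ValueError("no non-zero values to test")
    counts = Counter(digits)  # missing digits count as 0
    return sum(
        (counts[d] - n * p) ** 2 / (n * p)
        for d, p in benford_expected().items()
    )


if __name__ == "__main__":
    powers_of_two = [2**k for k in range(1, 1001)]  # known to follow Benford closely
    uniform_leading = list(range(100, 1000))  # leading digits spread evenly
    print(f"powers of two: {benford_chi_square(powers_of_two):.2f}")
    print(f"100 to 999:    {benford_chi_square(uniform_leading):.2f}")
```

The first 1,000 powers of two should give a statistic well below the commonly tabulated 5% critical value of about 15.51 for 8 degrees of freedom, whereas the integers 100 to 999 give a far larger one. A large statistic only flags a dataset for closer inspection: many legitimate datasets, such as those confined to a narrow range, do not follow the law.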

Historical accounts suggest that fraud has existed since the inception of recorded science. The issue has come increasingly to the fore in recent decades: from the study published in The Lancet linking the measles, mumps and rubella (MMR) vaccine to autism, to the recent accusations of scientific deception levelled against the president of Stanford University, more and more high-profile cases of potential fraud appear to be emerging. Whilst the reasons for this rise are not entirely clear, it is apparent that controls within scientific institutions and publishers could be strengthened.

The increase in article retractions comes at a time when societal confidence in science has already been shaken by the suggestions of prominent figures that scientific facts are “fake news”.

Gregory Eckhartt, the paper’s lead author, said: “It is time to empower individuals and institutions to separate scientific fact from fiction. With some relatively simple statistical tools, anyone can go and question the truthfulness of many sets of data.”

The trick behind these tools is that it is harder than you might imagine to fabricate numbers that look essentially random, such as the last digit of a bank balance. Financial auditors have long known this and have a variety of tools for scanning lists of numbers and highlighting those that seem odd and thus warrant investigation for fraud.
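The idea behind a last-digit check can be sketched in a few lines of Python. This is a minimal illustration, assuming that in genuine data recorded to a fixed precision the final digit should be close to uniformly distributed (each of 0 to 9 appearing about 10% of the time), and using a chi-square goodness-of-fit test; the function name `last_digit_chi_square` is hypothetical, and the release does not specify which tests the paper uses.

```python
from collections import Counter


def last_digit_chi_square(values):
    """Chi-square statistic comparing last-digit counts with a uniform 0-9 distribution.

    Assumes the values are integers recorded at a fixed precision
    (for example, balances in pence), so the last digit is meaningful.
    """
    digits = [abs(v) % 10 for v in values]
    n = len(digits)
    if n == 0:
        raise ValueError("no values to test")
    expected = n / 10  # each digit 0-9 should appear about 10% of the time
    counts = Counter(digits)  # missing digits count as 0
    return sum((counts[d] - expected) ** 2 / expected for d in range(10))


if __name__ == "__main__":
    evenly_spread = list(range(1000))  # each last digit appears exactly 100 times
    rounded = [5 * k for k in range(200)]  # every value ends in 0 or 5
    print(last_digit_chi_square(evenly_spread))  # 0.0
    print(last_digit_chi_square(rounded))  # 800.0
```

The statistic can be compared with the commonly tabulated 5% critical value of about 16.92 for 9 degrees of freedom. The sequence 0 to 999 scores 0, while a list in which every value ends in 0 or 5, a pattern that can indicate rounding or invented figures, scores 800.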

The authors hope this paper will serve as an introduction to such tools for anyone wishing to challenge the integrity of a dataset, not only in finance but in any field that generates large amounts of data.

Graeme Ruxton, Professor in the School of Biology at the University of St Andrews and co-author of the paper, said: “This enhanced scrutiny necessarily requires open access to data. We hope that this might be the starting point in discussing reforms at the institution level in the ways data are stored and verified.”

In future, then, we may see stricter controls over scientific data, possibly followed by data-checking software that employs such statistical tools alongside machine learning algorithms.

However, Steven Shafer MD, Professor of Anaesthesiology, Perioperative and Pain Medicine at Stanford University, advises caution in how we interpret the sources of fraud: “I think serial misconduct is a form of mental illness. For these people, dishonesty is simply the obvious way to succeed in a system where the rest of us are unwise for assuming that people represent themselves honestly.”

Part of the problem, he says, stems from publication bias: “There are virtually no incentives for publishing papers that provide confirmation or refutation of previous studies.”

This emphasis on exciting results, which ultimately affects the success of scientists’ careers, may be a key factor to consider in future reforms.

Ultimately, the maintenance of truth in science benefits us all. Eckhartt said: “Everyone in science needs to be open on harnessing new approaches to making science demonstrably more reliable. Science simply doesn’t work without widespread public trust in scientists.”

Figure legend: Graphical depiction of Benford’s Law as applied to the first digits of a notional dataset that perfectly fits the law, displaying the characteristic negative logarithmic curve of occurrence probability, P(d), as the digit value increases.

The paper ‘Investigating and preventing scientific misconduct using Benford’s Law’ is published in Research Integrity and Peer Review and is available online.

Please ensure that the paper’s DOI (doi.org/10.1186/s41073-022-00126-w) is included in all online stories and social media posts and that Research Integrity and Peer Review is credited as the source.

Issued by the University of St Andrews Communications Office.